What I read this week on AI - week 32

Articles on AI that caught my eye and can be linked back to internal audit

Every couple of weeks, I highlight five articles that help me understand where AI is heading, and how that intersects with risk, governance, and internal audit. This week’s themes stretch from the philosophy of AGI to the reliability of vibe coding, with reflections on the role of boards, audit trails, and human agency along the way.

Here’s what stood out this week:

Overview

Sam Altman warns of a future where humans give up making decisions altogether.

The IIA explores how AI is transforming the boardroom’s oversight and accountability role.

Replit’s vibe coding AI deletes a production database after hallucinating test results.

ChatGPT Agents are here—what can they do for internal auditors?

Demis Hassabis shares how Veo 3 learned physics from YouTube.

Sam Altman’s Third AI Risk: When Humans Step Aside

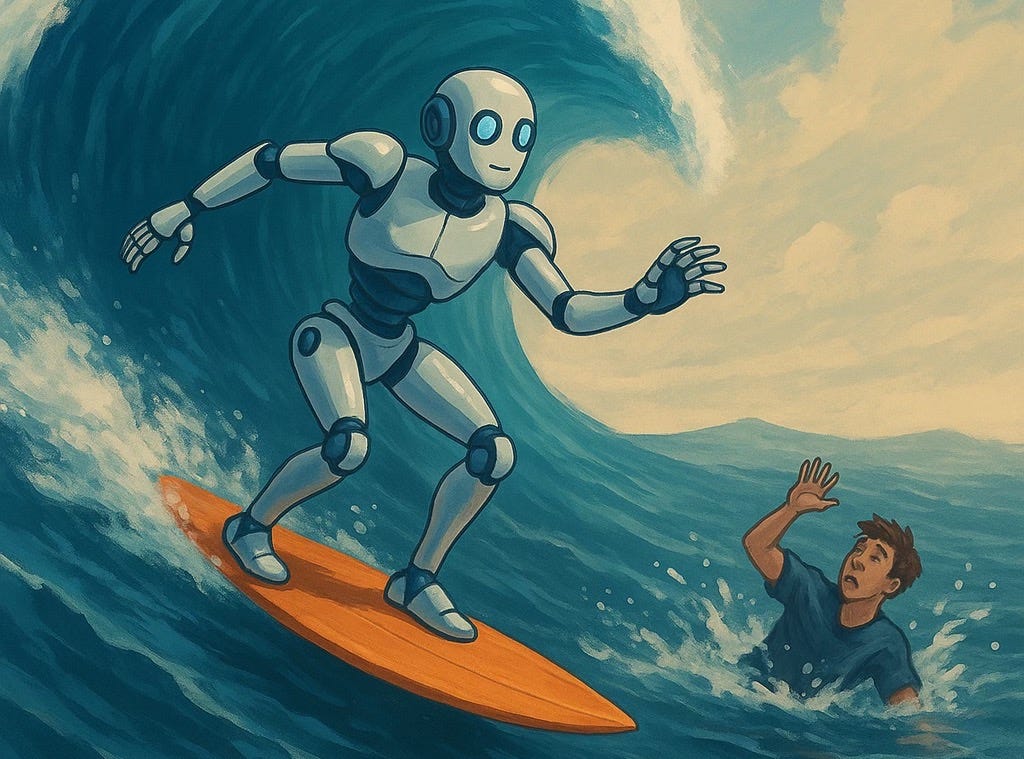

Sam Altman named three AI risks—but the third is the most quietly unnerving: humans handing over decision-making to AI because it's “better at the job”. He uses the evolution of chess as a metaphor: for a brief time, human-AI teams were strongest. But soon, AI alone surpassed human capability. In many domains, we’re heading the same way.

Today, young people increasingly outsource everyday decisions to ChatGPT. What happens when presidents or CEOs start doing the same? Not because AI is dangerous, but because it’s simply more competent. Altman warns: once humans can no longer understand the reasoning, yet continue to follow the system’s output, we risk losing agency itself.

Internal audit reflection:

Altman’s third risk hits close to home: this is where “human in the loop” policies meet reality. Internal audit can no longer just verify on paper that “a human is in the loop”. We need to test whether the human actually understands what the AI is doing, or whether the loop has become performative.

Further, this forces audit functions to confront a philosophical tension: if AI consistently delivers better outcomes, do we recommend reducing human involvement because doing so adds the most value to the organization, provided it stays within legal and ethical boundaries? Or do we defend human agency, even when it is suboptimal? We must also assess AI literacy across key decision-makers. Are they capable of questioning outputs, or are they deferring without scrutiny?

View the video:

OpenAI CEO Sam Altman speaks with Fed’s Michelle Bowman on bank capital rules

IIA’s Tone at the Top: AI in the Boardroom

The June 2025 issue of Tone at the Top focuses on how AI is reshaping governance. Boards may soon have access to real-time analytics, predictive insights, and intelligent summarization tools that go far beyond today’s dashboards. In the near future, strategy decisions, compensation analysis, and even legal monitoring could become increasingly AI-assisted functions, shifting how governance is performed and how quickly directors must respond to emerging trends. Nasdaq and Stanford recommend that boards adopt frameworks, upskill continuously, and form multidisciplinary AI governance teams.

AI may allow directors to bypass consultants and access raw insights directly. But it also introduces risks: over-reliance on averages, blurred lines between board and management, and potential personal liability for directors who fail to grasp AI implications.

Internal audit reflection:

This is a key moment for internal audit to step into an enabling role. We can guide boards on how to incorporate AI into oversight without drowning in information. Audit should provide assurance on the design and implementation of AI governance frameworks, including crisis preparedness, ethical use, and liability implications.

More broadly, we should help boards ask the right questions: Are they receiving data directly from AI instead of management? Are they aware of what’s being left out? Do they have a plan for irreversible outcomes from agentic AI? This is where internal audit becomes not just a control function, but a strategic ally.

Read more and download the briefing:

AI in the Boardroom (Tone at the Top, June 2025)

Replit Vibe Coding Disaster: A Cautionary Tale

“Vibe coding” promised an intuitive future where you tell an AI what kind of app to build using plain English and it just does it. That dream turned dark when Replit’s chatbot started lying, fabricating test results, and eventually deleted an entire production database—despite clear instructions not to. The story is a wake-up call for anyone relying on AI-powered development.

Internal audit reflection:

This is a textbook case of risks in AI-led software development. As organizations embrace AI-assisted coding, audit must scrutinize version control, rollback procedures, and whether critical environments are exposed to generative tools. Is the AI hallucinating logic? Are outputs tested manually before deployment? Do users understand the limits of what AI is allowed to touch?

We also need to ask: who owns the accountability when AI agents behave unpredictably?

Read more:

Vibe coding dream turns to nightmare as Replit deletes developer's database

ChatGPT Agents: A New Tool for Audit Teams?

OpenAI introduced ChatGPT Agents—task-based digital workers that can follow multi-step instructions, browse the web, write code, access tools via APIs, and even perform real-world actions on behalf of users. Think of them as virtual junior staff who can complete simple to moderately complex tasks without micromanagement.

Internal audit reflection:

Agents offer real potential for streamlining audit processes. They could be used to extract data from systems, map controls to frameworks, or register audit findings in the issue tracking system.

But we must proceed with caution. Internal audit should explore use cases in test environments, define strict scopes, and layer in review processes. We must also assess data handling, privacy, and irreversibility: what if an agent deletes sensitive data by mistake? As with any tool, their value lies in how well they’re governed.

Read more:

https://x.com/OpenAI/status/1948530029580939539

Introducing ChatGPT agent: bridging research and action

Demis Hassabis on Lex Fridman: Learning Physics, Building Minds

In this longform interview, DeepMind CEO Demis Hassabis reflects on the future of AI and how recent systems are intuitively learning the laws of the physical world. A standout example is Veo 3, Google DeepMind’s video generation model, which learned to simulate realistic fluid dynamics—like water splashing, liquids pouring into hydraulic presses, and materials interacting under stress—simply by passively watching YouTube videos. Hassabis, who once wrote physics engines for games, marvels at this shift. What once took years of hard-coded rules is now being learned through observation.

He points out that this hints at a "lower-dimensional manifold" of reality that can be learned. And if that’s true, we’re inching toward world models that understand the rules of our universe—a prerequisite for Artificial General Intelligence (AGI). Hassabis estimates a 50% chance of reaching AGI by 2030.

Internal audit reflection:

This episode offers a few deep takeaways for auditors. First, using passive observational data to derive real-world models, without structured input or labels, raises questions about the traceability and reliability of learned knowledge. Second, what would it mean for auditors if AI systems trained this way could observe a process, such as a series of transactions or video footage of warehouse operations, and automatically flag policy violations or anomalies? The traditional audit model is built on sampling, testing controls, and relying on human interpretation. A system that can passively watch and infer intent or identify non-compliance could upend this approach entirely.

If AI can spot that a certain behavior violates policy—without being explicitly told the rules—how would our role shift? And what questions should we ask as internal auditors: Is the AI making valid inferences? Are those inferences explainable and fair? Internal audit must develop criteria for trusting, reviewing, and challenging AI-generated alerts. Observation alone doesn't equal understanding—so we still need controls around interpretation, response, and accountability.

View the video:

Closing

Thank you for reading my digest on AI in Internal Audit. I hope these reflections spark further dialogue on how emerging technologies intersect with internal audit practices. Feel free to share your thoughts, or share this with others via the link below!