What I read this week on AI - week 17

Articles on AI that caught my eye and can be linked back to internal audit

Last week I read a range of articles on AI, each showing how the technology is reshaping a different part of our world: the Bank of England’s concerns over AI-induced financial instability, the evolving job landscape highlighted by Forbes, the integration of AI into Google’s coding processes, advances in AI model capabilities, and an innovative use of AI against online scammers. These developments are not only fascinating but also carry significant implications for internal audit professionals. As AI continues to permeate different sectors, it’s crucial for us to understand and adapt to these changes to ensure effective governance and risk management.

Here’s what caught my attention last week:

Overview

AI-Induced Financial Instability: The Bank of England highlights risks where AI-driven trading could amplify market volatility and systemic shocks.

Jobs Most Vulnerable to AI: Roles involving repetitive tasks, such as data entry and basic analysis, are at high risk of automation.

AI Creates Over 25% of New Code at Google: More than a quarter of new code at Google is now generated by AI, then reviewed and accepted by engineers.

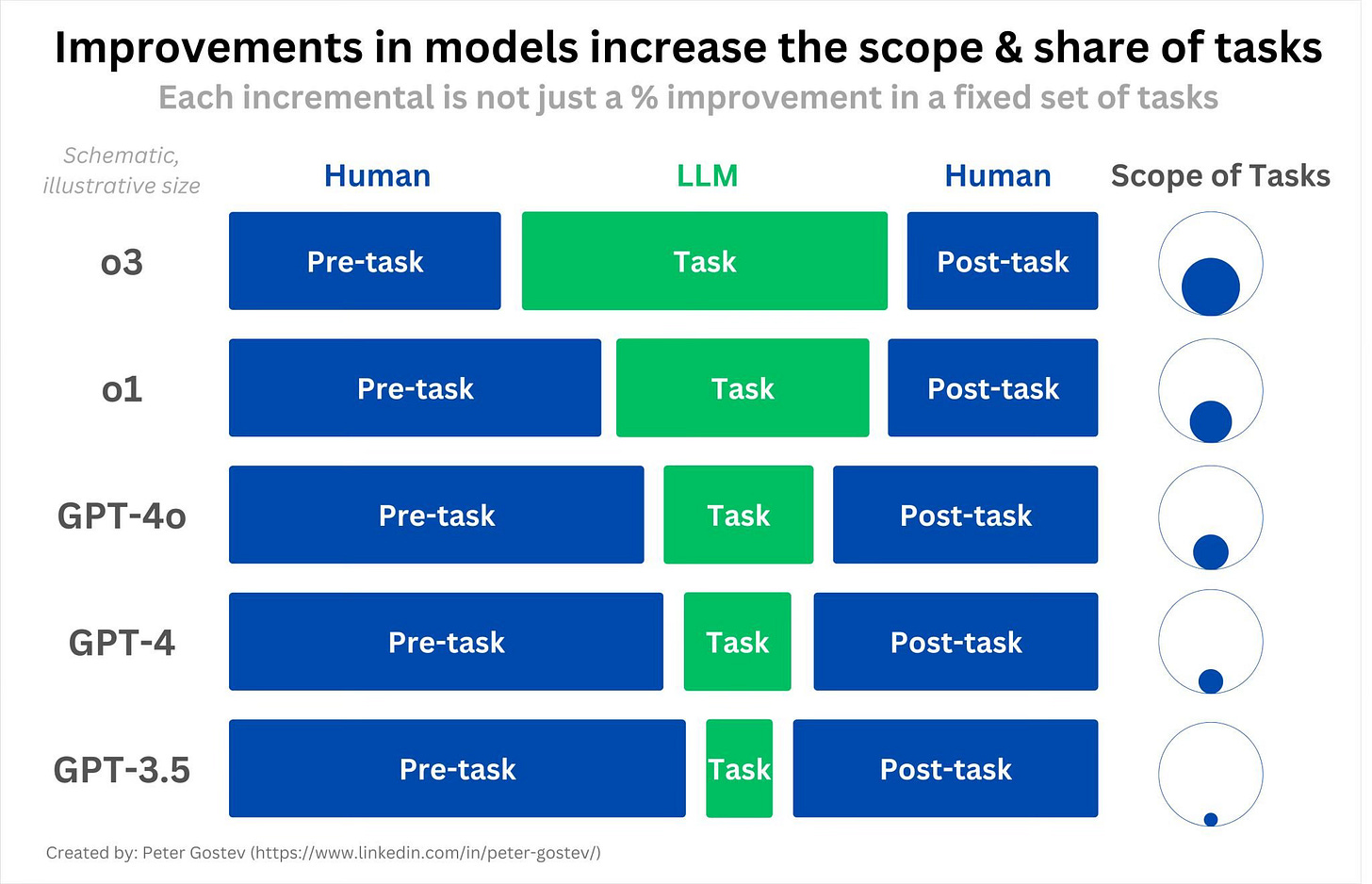

New AI Models Reduce Human Preparation: A tweet highlights how newer AI models like o3 and o4-mini, with integrated tool use, can handle complex tasks, reducing the need for human preparation.

Scamming Scammers with AI: A creative use of AI showcases how it can be employed to counteract online scams, turning the tables on fraudsters.

Bank of England Warns of AI-Induced Financial Instability

The Bank of England’s recent report raises concerns about AI’s potential to destabilize financial markets. It warns that AI-driven trading algorithms could lead to herd behavior, amplifying market shocks and increasing systemic risks. The report also highlights the dangers of over-reliance on a few AI providers, which could result in widespread vulnerabilities.

Reflection:

For internal auditors, this underscores the importance of assessing AI-related risks within financial institutions. Auditors should evaluate the robustness of AI models, the diversity of AI providers, and the potential for systemic risks arising from AI-driven decision-making.

Read more:

Financial Stability in Focus: Artificial intelligence in the financial system

Bank of England says AI software could create market crisis for profit

Forbes Lists Jobs Most Vulnerable to AI Disruption

According to Forbes, jobs involving repetitive tasks—such as data entry, basic analysis, and routine administrative functions—are most susceptible to AI-driven automation. Conversely, roles requiring complex problem-solving and human interaction are less likely to be affected in the near term.

Reflection:

Internal audit functions that rely heavily on routine tasks should proactively seek opportunities to integrate AI, allowing auditors to focus on more strategic activities. This shift necessitates upskilling and a reevaluation of audit methodologies to remain relevant and effective.

Read more:

The Jobs That Will Fall First As AI Takes Over The Workplace

AI Now Generates Over 25% of New Code at Google

Google CEO Sundar Pichai announced during the Q3 2024 earnings call that more than a quarter of all new code at Google is now generated by AI, then reviewed and accepted by engineers. This development underscores the growing role of AI in software development, where it is being used to enhance productivity and efficiency within the company.

Reflection:

This advancement signals a transformative period in software development practices. For internal auditors, it raises considerations around the governance of AI-generated code, the integrity of software development processes, and the need to adapt audit methodologies to address the integration of AI in coding activities.

Read more:

Google CEO says over 25% of new Google code is generated by AI

Google Q3 earnings call: CEO’s remarks

Advancements in AI Model Capabilities Expand Task Delegation

A recent tweet explained how newer AI models like o3 and o4-mini are not just better on benchmarks but also significantly expand the scope of tasks they can handle. With GPT-3.5 and GPT-4, humans still handled much of the work. With GPT-4o, o1, and especially o3, models increasingly manage entire task flows with minimal human preparation, thanks to integrated tool use.

Reflection:

Internal audit should rethink what “delegation” to AI really means. As AI models become capable of handling pre-task setup, execution, and even post-task processing, auditors will need to evaluate AI’s role not just as a tool but as an operational participant. That raises new questions around accountability, quality assurance, and segregation of duties.

Read more:

https://x.com/koltregaskes/status/1916178409094582381

YouTube Video Demonstrates AI’s Role in Scamming Scammers

A YouTube video showcases an innovative application of AI, where a bot army is used to scam scammers, effectively turning their tactics against them. This creative use of AI highlights its potential in cybersecurity and fraud prevention.

Reflection:

This example illustrates the dual-use nature of AI technologies. Internal auditors should assess both the defensive and offensive capabilities of AI within their organizations, ensuring that AI tools are used responsibly and effectively to mitigate risks and enhance security.

View the video:

Closing

Thank you for reading my weekly digest on AI in Internal Audit. I hope these reflections spark further dialogue on how emerging technologies intersect with internal audit practices. Feel free to share your thoughts, or pass this on to others via the link below!